A beginner's guide to AWS best practices

I was recently asked to run a session on “AWS Best Practices” with some engineers who were starting their careers at Instil.

The topic itself is huge and would be impossible to distil down to just a few points, so this post covers a few of the obvious choices and how you can find out more information.

Security

This should be the obvious first choice. When you are developing cloud applications, security should be at the very forefront of your mind. Security is a never-ending game of cat and mouse. It cannot be a checkbox exercise that is marked as “done”: as your application evolves, so must your security posture. Here are a few key points to consider from the outset.

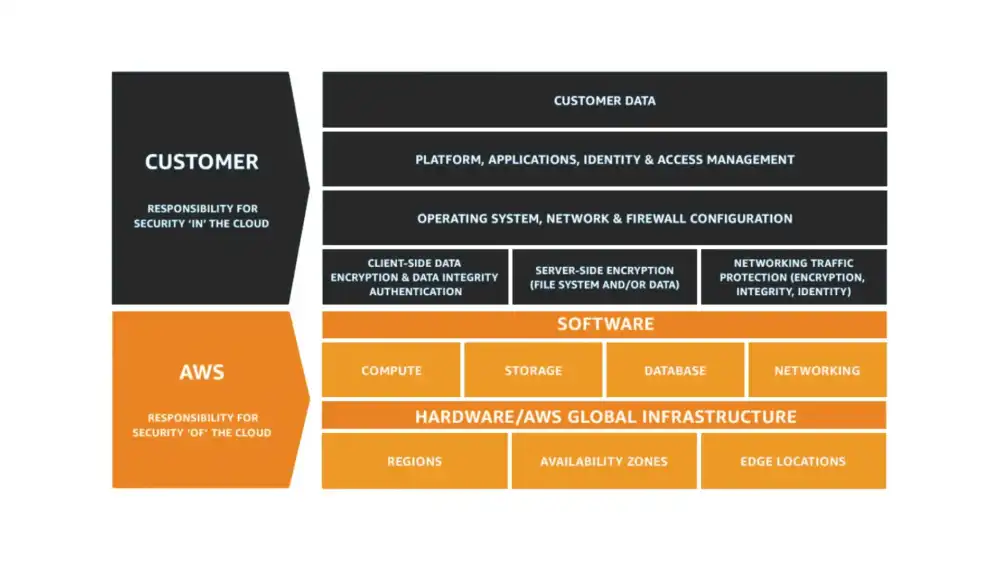

Understand the Shared Responsibility Model

Thankfully with AWS, part of the security burden has already been taken care of for you. This is explained with what AWS call the “Shared Responsibility Model”.

This is usually summarised down to:

AWS is responsible for “Security of the Cloud”; the customer is responsible for “Security in the Cloud”.

This is an important concept to understand. You can minimise your responsibility by choosing Serverless or managed services, but there will always be some level of responsibility as a customer of AWS.

Secure your Root Account

This might not be something you ever have to do, as account creation will normally be taken care of by your organisation (and would hopefully be automated). I thought it was worth sharing anyway, as you might be setting up a personal AWS account for training, experimentation or even certifications.

When setting up a brand new AWS account you will start by creating a “root user”. It can be tempting to then start using this user for deploying and managing your application. However, the root user has “the keys to the kingdom”: if it is compromised in any way, there is the potential to lose everything in that account, rack up an enormous AWS bill and expose further information about your organisation.

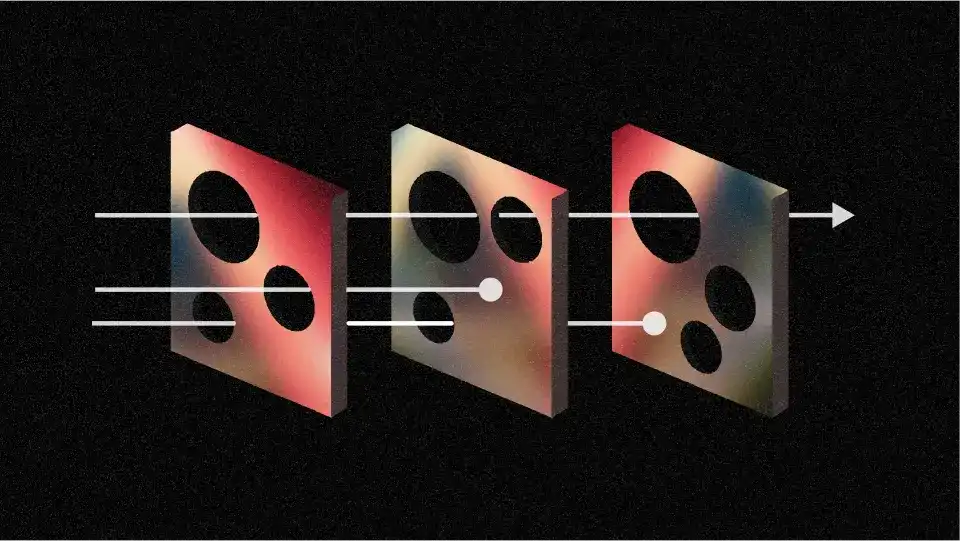

While securing your root account isn't a magic bullet, you should consider it part of the “Swiss cheese” approach to security:

The Swiss cheese model of accident causation illustrates that, although many layers of defense lie between hazards and accidents, there are flaws in each layer that, if aligned, can allow the accident to occur. In this diagram, three hazard vectors are stopped by the defences, but one passes through where the "holes" are lined up.

AWS Community Builder Andres Moreno has written a great post that covers the recommended settings for your root account, which they have summarised as:

- Do not create access keys for the root user. Create an IAM user for yourself with administrative permissions.

- Never share the root user credentials.

- Use a strong password. (Use a password manager if possible)

- Enable multi-factor authentication.

I would also add that these steps should be followed, and enforced, for any accounts you create afterwards.

In a multi-account setup, AWS automatically creates a strong root password for each member account and does not give you details of that password by default, specifically to discourage use of the root user. You can then use service control policies (SCPs) to deny all actions for the root user in a member account.
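
As a rough illustration of such an SCP, the sketch below builds a policy document that denies every action to the root user. The `aws:PrincipalArn` condition matching `arn:aws:iam::*:root` follows the pattern AWS documents for this purpose; the type and function names are hypothetical, and attaching the policy through AWS Organizations is not shown.

```typescript
// Shape of a policy document (only the fields used in this example).
interface PolicyStatement {
  Sid: string;
  Effect: "Allow" | "Deny";
  Action: string | string[];
  Resource: string | string[];
  Condition?: Record<string, Record<string, string | string[]>>;
}

interface PolicyDocument {
  Version: "2012-10-17";
  Statement: PolicyStatement[];
}

// Hypothetical helper: an SCP that denies all actions to the root user.
// SCPs apply to member accounts, not to the organisation's management account.
function buildDenyRootUserScp(): PolicyDocument {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "DenyRootUser",
        Effect: "Deny",
        Action: "*",
        Resource: "*",
        Condition: {
          // Matches the root user principal of whichever account the SCP applies to.
          StringLike: { "aws:PrincipalArn": "arn:aws:iam::*:root" },
        },
      },
    ],
  };
}

// Print the JSON that would be deployed as a service control policy.
console.log(JSON.stringify(buildDenyRootUserScp(), null, 2));
```

In practice you would define this through your IaC framework rather than by hand, so that it is applied consistently to every new account.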

Principle of least privilege (POLP)

While securing your account is an important first step in setting up your infrastructure, the Principle of least privilege is an important aspect to consider during the entire software development lifecycle.

The idea here is that a principal should only be able to perform the specific actions and access the specific resources that are required for it to function correctly.

More info can be found in the Well-Architected Framework.

Practically speaking, Infrastructure as Code (IaC) frameworks like CDK (Cloud Development Kit) make this very easy to implement. In fact, it’s considered a best practice of CDK itself to use its built-in grant functions. For example, giving a Lambda function read access to a bucket is a call along the lines of `myBucket.grantRead(myLambda)` (the variable names are illustrative):

This single line adds a policy to the Lambda function's role (which is also created for you). That role and its policies are more than a dozen lines of CloudFormation that you don't have to write. The AWS CDK grants only the minimal permissions required for the function to read from the bucket.
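
To make the idea concrete, here is a minimal sketch of the kind of narrowly scoped statement a read grant boils down to, written as plain TypeScript rather than CDK so that it runs without any dependencies. The helper names, the bucket name and the exact action list are assumptions for illustration; the permissions CDK actually generates may differ.

```typescript
interface AllowStatement {
  Effect: "Allow";
  Action: string[];
  Resource: string[];
}

// Hypothetical helper: allow reading from ONE named bucket and nothing else.
function readOnlyBucketStatement(bucketName: string): AllowStatement {
  const bucketArn = `arn:aws:s3:::${bucketName}`;
  return {
    Effect: "Allow",
    // Read-only actions; no s3:PutObject, s3:DeleteObject or "s3:*".
    Action: ["s3:GetObject", "s3:ListBucket"],
    // ListBucket applies to the bucket ARN, GetObject to the objects inside it.
    Resource: [bucketArn, `${bucketArn}/*`],
  };
}

// Simple guard that flags wildcard actions or a wildcard resource.
function isOverlyBroad(statement: AllowStatement): boolean {
  const broadAction = statement.Action.some((action) => action === "*" || /:\*$/.test(action));
  const broadResource = statement.Resource.some((resource) => resource === "*");
  return broadAction || broadResource;
}

const readStatement = readOnlyBucketStatement("example-reports-bucket");
console.log(JSON.stringify(readStatement, null, 2));
console.log("overly broad:", isOverlyBroad(readStatement));
```

The point of the guard is the contrast: a statement with `s3:*` or `Resource: "*"` would work just as well on day one, but it grants far more than the function needs.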

Handling Secrets

It is highly likely that you will have to handle some kind of secret when developing your application. To quote Corey Quinn:

Let's further assume that you're not a dangerous lunatic who hardcodes those secrets into your application code.

AWS gives two sensible options - Systems Manager Parameter Store or AWS Secrets Manager. There are pros and cons to both options, but understand that Parameter Store is just as secure as Secrets Manager, provided you use the `SecureString` parameter type. More info here.

In addition to choosing your secret service (pun intended), here are a few more pointers:

- Use your IaC framework to create and maintain secrets for you.

- Ensure that secrets are not shared across environments.

- Never store secrets in plaintext on developer machines.

- Never include secrets in log messages. (You can configure Amazon Macie to automatically detect credentials in data stored in S3)

- Rotate your secrets where possible, and again let the services handle this for you.
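
One way to act on the “never log secrets” pointer is to pass log messages through a redaction step before they are written. The sketch below is a minimal, dependency-free illustration; `redactSecrets` and the sample values are hypothetical, and a real system would ideally avoid passing raw secret values anywhere near log statements in the first place.

```typescript
// Hypothetical helper: replace every occurrence of each known secret value
// in a log message with a fixed placeholder.
function redactSecrets(message: string, secrets: string[]): string {
  let redacted = message;
  for (const secret of secrets) {
    // Skip empty strings: splitting on "" would break the message into characters.
    if (secret.length === 0) continue;
    redacted = redacted.split(secret).join("[REDACTED]");
  }
  return redacted;
}

const apiKey = "sk_test_not_a_real_key"; // made-up value for the example
const logLine = redactSecrets(`calling payments API with key ${apiKey}`, [apiKey]);
console.log(logLine); // calling payments API with key [REDACTED]
```

This is a safety net rather than a fix: it only catches values you already know about, so it complements the other pointers instead of replacing them.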

Encryption

When handling sensitive data it’s important to protect it. One technique you can use is encryption, both while the data is in transit and at rest. It may surprise you that encryption at rest is not switched on by default everywhere: S3 and DynamoDB now encrypt data at rest by default, but some resources (EBS volumes, for example) are only encrypted by default if you opt in, so check rather than assume.

Thankfully, tools like cdk-nag can help you enforce some best practices around this and enable encryption from the beginning.
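
To give a feel for the kind of rule such a tool applies, here is a toy check over a CloudFormation-style template, written in plain TypeScript. It is not the API of any real tool: `findUnencryptedVolumes` and the sample template are hypothetical, and the check only looks at the `Encrypted` property of `AWS::EC2::Volume` resources.

```typescript
interface TemplateResource {
  Type: string;
  Properties?: Record<string, unknown>;
}

interface CfnTemplate {
  Resources: Record<string, TemplateResource>;
}

// Hypothetical rule: return the logical IDs of EBS volumes that are not
// explicitly marked as encrypted.
function findUnencryptedVolumes(template: CfnTemplate): string[] {
  return Object.keys(template.Resources).filter((logicalId) => {
    const resource = template.Resources[logicalId];
    return (
      resource !== undefined &&
      resource.Type === "AWS::EC2::Volume" &&
      resource.Properties?.["Encrypted"] !== true
    );
  });
}

// Trimmed-down sample template (required properties omitted for brevity).
const sampleTemplate: CfnTemplate = {
  Resources: {
    DataVolume: { Type: "AWS::EC2::Volume", Properties: { Size: 100, Encrypted: true } },
    ScratchVolume: { Type: "AWS::EC2::Volume", Properties: { Size: 20 } },
    UploadsBucket: { Type: "AWS::S3::Bucket" },
  },
};

// Only ScratchVolume is reported: it is a volume with no Encrypted flag.
console.log(findUnencryptedVolumes(sampleTemplate));
```

Running a check like this in your pipeline means an unencrypted resource fails the build instead of reaching production.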

Cost

It’s becoming increasingly apparent that cloud developers need to understand the cost of the changes they are making. AWS provide a number of tools to help you analyse the cost of your application when it is up and running. However, cost should also be factored into the design stage of the development process.

Cost has become such an important factor in architecting cloud applications that it featured heavily in Amazon CTO Werner Vogels’ keynote at re:Invent 2023. In it he shared The Frugal Architect which contains some "Simple laws for building cost-aware, sustainable, and modern architectures."

When we talk about cost, yes, we should consider the cost of the service itself, but we should also consider the Total Cost of Ownership.

- Familiarise yourself and learn how to read the pricing tables of various services on AWS.

- Create budget alerts to avoid unexpected bills.

- Include cost optimisation as part of your design stage.

- Get comfortable with using Cost Explorer - when costs spike it's important to understand how to drill down into costs.
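
Reading a pricing table usually ends in a bit of arithmetic. As a sketch, the function below estimates a monthly AWS Lambda bill from request count, average duration and memory size. The two prices are illustrative figures hard-coded as assumptions (check the current pricing page for your region and architecture), the free tier is ignored, and `estimateLambdaMonthlyCost` is a hypothetical name.

```typescript
// ASSUMED prices for illustration only; real prices vary by region and change over time.
const PRICE_PER_MILLION_REQUESTS_USD = 0.2;
const PRICE_PER_GB_SECOND_USD = 0.0000166667;

interface Workload {
  requestsPerMonth: number;
  averageDurationMs: number;
  memoryMb: number;
}

// Hypothetical estimator: request charge plus compute charge, ignoring the free tier.
function estimateLambdaMonthlyCost(workload: Workload): number {
  const requestCost = (workload.requestsPerMonth / 1000000) * PRICE_PER_MILLION_REQUESTS_USD;
  // Compute is billed in GB-seconds: duration in seconds multiplied by memory in GB.
  const gbSeconds =
    workload.requestsPerMonth * (workload.averageDurationMs / 1000) * (workload.memoryMb / 1024);
  const computeCost = gbSeconds * PRICE_PER_GB_SECOND_USD;
  return requestCost + computeCost;
}

const monthlyEstimate = estimateLambdaMonthlyCost({
  requestsPerMonth: 5000000,
  averageDurationMs: 200,
  memoryMb: 512,
});
console.log(`Estimated monthly cost: $${monthlyEstimate.toFixed(2)}`);
```

Even a rough model like this is useful at design time: doubling the memory or the average duration doubles the compute part of the bill, which is easy to miss until the invoice arrives.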

Serverless First

Serverless has grown to mean many things and can even mean something different depending on whose cloud you are paying for. But for our team and for the purpose of this article, serverless is a way of building and running applications without having to manage the underlying infrastructure on AWS.

Of course there are still servers running in a data centre somewhere but those servers are not our responsibility. With Serverless we as developers can focus on our core product instead of worrying about managing and operating servers or runtimes.

By choosing “Serverless” or “Managed Services” as much as possible we allow AWS to do the “undifferentiated heavy lifting” for us. These are things that are important for the product to function but do not differentiate it from your competitors.

The benefits of Serverless are clear:

- No infrastructure provisioning or maintenance

- Automatic scaling

- Pay for what you use

- Highly available and secure

What we don't want to be is dogmatic. It’s very tempting to be “Serverless Only”; however, in certain circumstances this can lead to increased complexity (for no reason) and increased cost (especially at a certain scale).

Serverless first means treating it as the first step: use it to make a start and iterate quickly, but don't be sad if it’s not a good fit for certain customers or workloads.

Furthermore, it’s important to treat any decision you make as a “two way door”. Architecture decisions are never final: if something doesn't work or starts to cost too much, change it!

Master the basics

It’s entirely possible that you land on a Serverless project and avoid having to do some of the undifferentiated heavy lifting we have already alluded to. But this doesn't mean you should be ignorant of it. Networking concepts and core AWS services should be understood, even if they are not part of the “Serverless” offering.

AWS Certifications are a great way to be exposed to these kinds of topics (check out my colleague Tom’s post for more info), but as a start I cannot recommend the free “Tech Fundamentals” course by Adrian Cantrill enough. It is not AWS specific but covers fundamental knowledge that will help you design, develop and debug applications in the cloud.

Automate everything

We’ve mentioned Infrastructure as code a number of times already. Using IaC frameworks ensures that we have consistency and repeatability in resource provisioning and management. While you might not be dealing with physical servers, I find the Snowflake Server concept a really nice way of reminding myself why we spend time automating deployments.

Snowflakes are unique: good for a ski resort, bad for a data center.

We’ve got quite a bit of experience using CDK across a number of projects, so I would recommend it as a great place to start. There are a number of posts on our blog which help cover some fundamental aspects:

- CDK Lessons learned - https://instil.co/blog/cdk-lessons-learned/

- Improving the CDK development cycle - https://instil.co/blog/improving-cdk-development-cycle/

An added benefit of CDK is that there are plenty of ready made constructs and patterns for you to re-use on your projects.

Well-Architected Framework

The Well-Architected Framework could be considered “the mother of all AWS best practice guides”. It breaks down what a well-architected application looks like using six pillars:

- Operational Excellence

- Security

- Reliability

- Performance Efficiency

- Cost Optimisation

- Sustainability

While I hope you have found this post useful, I would recommend you use it as a start, and then branch into the Well-Architected Pillars for further reading and then apply what you have learnt to your projects.

There is also a Well-Architected Tool that can help you assess your application against these pillars. It’s important to note that this is not a “checklist” and you should not aim for a “perfect score”. Instead it should be used as a guide to help you identify areas of improvement.