In early 2021, we embarked on an ambitious project to build a car insurance platform from scratch using Serverless technology on AWS. Over the past two years, our team has learned a great deal about what it takes to build a Serverless platform - what it means to be Serverless-first, what works well, what doesn't, and most importantly, how to fully embrace the Serverless offering on AWS.

The official vision for Stroll is:

A digitally led broking business, combining innovative technology with industry-leading expertise to deliver exceptional customer experiences. Along the way, Stroll is transforming how people buy insurance and manage their policy, starting with the car insurance segment with a clear vision of building into other areas and across new territories.

The innovative technology mentioned in this vision is a shiny new car insurance platform built with managed services on AWS. From the beginning we wanted to build it using Serverless technology.

Serverless has grown to mean many things and can even mean something different depending on which cloud provider you are paying for. For our team and for the purpose of this article, Serverless is a way of building and running applications without having to manage the underlying infrastructure. Of course there are still servers running in a data centre somewhere but those servers are not our responsibility. With Serverless we as developers can focus on our core product instead of worrying about managing and operating servers or runtimes.

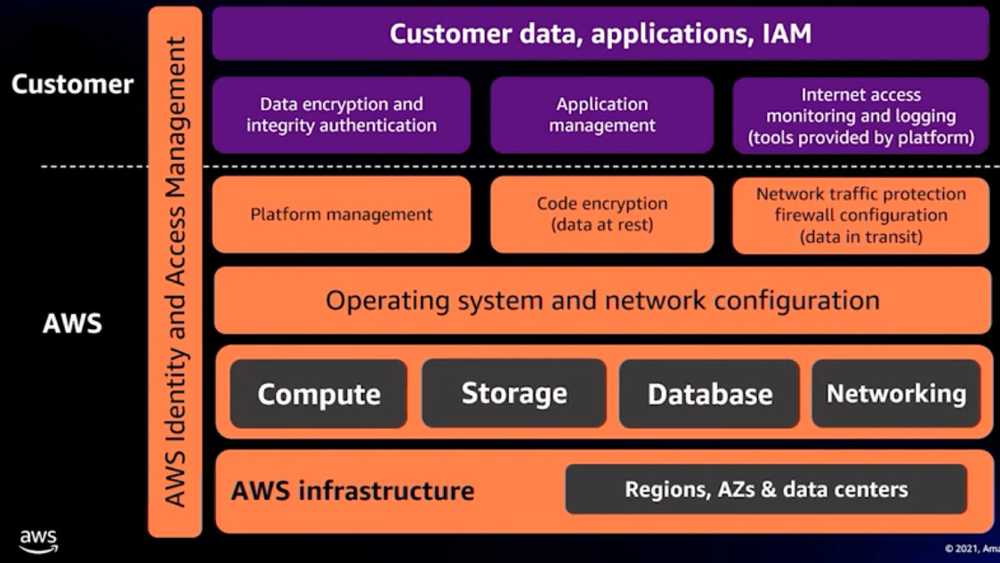

AWS Shared Responsibility Model

AWS Shared Responsibility Model

Most cloud providers have this concept of a shared responsibility model which breaks down what your responsibility is as a customer of AWS but also what AWS will take responsibility for. What we want to do is move that dotted line up as much as possible and allow AWS to do the “undifferentiated heavy lifting” (the stuff that adds no value to the product) for us.

The benefits of Serverless are clear:

- No infrastructure provisioning or maintenance

- Automatic scaling

- Pay for what you use

- Highly available and secure

Through this series of blog posts, we're excited to share our team's Serverless journey with you and hope you learn something useful regardless of your favourite cloud provider.

Phase 1 - The Lambdalith

When I joined Instil 4 years ago one of the things I noticed right away was their focus on quality. Testing was at the core of every project you quickly buy in and appreciate the benefits of test driven development and automated testing.

This project was no different. I can say with the utmost confidence that my colleagues had good unit testing in from the very start.

But there was one mindset that we started with that needed to change and that was the ability to build, run and test the backend locally.

It would be madness to not be able to run the entire stack on your machine right?!

However when you are building Serverless applications you really need to switch your mindset from running locally to running in the cloud. This will have a profound impact on not only how you verify that your application is working correctly but also how you architect the solution.

This project started off treating AWS Lambda as just another way to get some compute in the cloud. Yes we were using a Lambda function, but really it could have easily been a docker container or an EC2 instance instead.

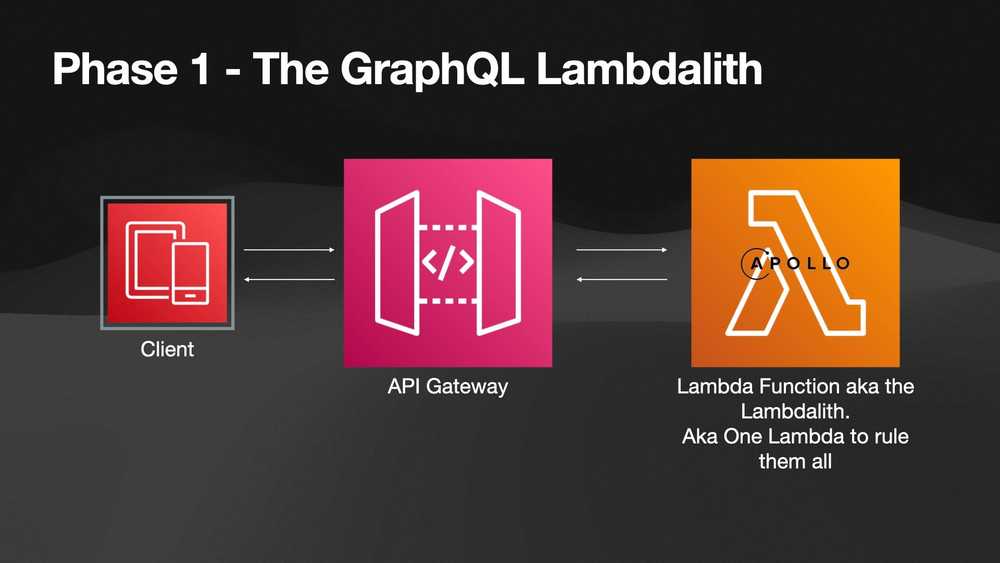

The team had built what you could call a Lambdalith. A single Lambda running Apollo server and resolving all of the GraphQL mutations and queries within that single Lambda. Now why do such a thing?

-

The entire backend can be run locally: click the green play button in your IDE, or run a terminal command and off you go. You can have your client talking to the backend in no time.

-

The solution is more portable: there is less chance of “vendor lock-in”, meaning if AWS suddenly gets too expensive you can move to another provider with less effort.

And yes, these are valuable things, but what are the downsides?

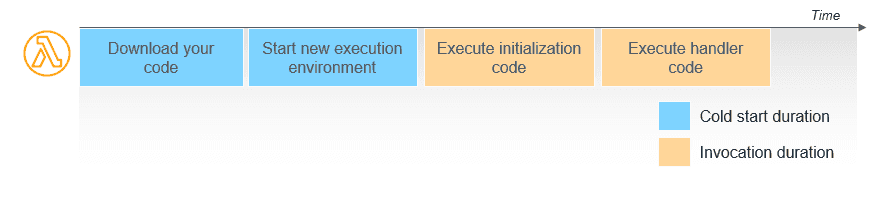

Spend anytime investigating AWS Lambda and you will most likely encounter the term “cold start”. Your functions will shutdown if they haven’t been invoked in a while so there is a period of time when they need to initialise the underlying runtime, load your function code and then execute it.

Source: https://aws.amazon.com/blogs/compute/operating-lambda-performance-optimization-part-1/

Source: https://aws.amazon.com/blogs/compute/operating-lambda-performance-optimization-part-1/

In the early days of Lambda this cold start introduced so much latency that it ruled the service out for many teams. However, because it’s a managed service, AWS have been able to optimise this process and you essentially get those optimisations for “free” without needing to make any code changes. Because of our decision to create a Lambdalith, we were doing some heavy lifting of our own after already incurring a cold start penalty. Namely the initialisation of Apollo server. The desire to test and run locally caused us to incur a performance hit in production!

The importance of testing locally forced the team to make an important architectural decision of a Lambdalith.

This not only meant that we were taking a performance and cost hit but we also were not making full use of other AWS managed services like AppSync (AWS' managed GraphQL Service).

So, how do we make testing in the cloud easier for developers?

-

Ensure that every developer has their own AWS account. This is crucial, having a sandboxed environment makes it easy for developers to not only test their code but also test their deployments as well. You could have one shared developer account and prefix resources with developer names or something like that but this is just asking for some kind of conflict or accidental deletion.

-

Make the deployment process fast. We make heavy use of the hotswap and watch functionality in CDK, enabling developers to hot-reload their changes automatically.

-

If you need an even shorter feedback loop, consider invoking your Lambda code locally. It’s just a function after all and AWS services are accessed using HTTP APIs. You just need to make sure you have the correct permissions and resources deployed.

I like to think of this as phase one of the project. It worked, it was built using Serverless technologies but it had much room for improvement. The team realised that this approach was not going to work going forward and decided to replace Apollo server with AppSync, a good decision as we no longer had to worry about maintaining our own Apollo server and could instead hand that responsibility over to AWS. This change also enabled us to break down the Lambdalith into smaller domain based services instead, which we will cover in our next post.